环境以及版本

- CentOS7

- DataSphere Studio1.1.0

- Jdk-8

- Hadoop2.7.2

- Hive2.3.3

- Spark2.4.3

- MySQL5.5

基础环境准备

基础软件安装

sudo yum install -y telnet tar sed dos2unix mysql unzip zip expect python java-1.8.0-openjdk java-1.8.0-openjdk-devel

nginx安装特殊一些,不在默认的 yum 源中,可以使用 epel 或者官网的 yum 源,本例使用官网的 yum 源

sudo rpm -ivh http://nginx.org/packages/centos/7/noarch/RPMS/nginx-release-centos-7-0.el7.ngx.noarch.rpm

sudo yum install -y nginx

sudo systemctl enable nginx

sudo systemctl start nginx

MySQL (5.5+); JDK (1.8.0_141以上); Python(2.x和3.x都支持); Nginx

特别注意MySQL和JDK版本,否则后面启动会有问题

Hadoop安装

采用官方安装包安装,要求Hadoop版本对应如下

Hadoop(2.7.2,Hadoop其他版本需自行编译Linkis) ,安装的机器必须支持执行 hdfs dfs -ls / 命令

安装步骤,创建用户

sudo useradd hadoop

修改hadoop用户,切换到root帐号,编辑/etc/sudoers(可以使用visudo或者用vi,不过vi要强制保存才可以),添加下面内容到文件最下方

hadoop ALL=(ALL) NOPASSWD: NOPASSWD: ALL

切换回hadoop用户,解压缩安装包

su hadoop

tar xvf hadoop-2.7.2.tar.gz

sudo mkdir -p /opt/hadoop

sudo mv hadoop-2.7.2 /opt/hadoop/

配置环境变量

sudo vim /etc/profile

添加如下内容(偷下懒,把后面的Hive和Spark环境变量也一同配置好了)

export HADOOP_HOME=/opt/hadoop/hadoop-2.7.2

export HIVE_CONF_DIR=/opt/hive/apache-hive-2.3.3-bin/conf

export HIVE_AUX_JARS_PATH=/opt/hive/apache-hive-2.3.3-bin/lib

export HIVE_HOME=/opt/hive/apache-hive-2.3.3-bin

export SPARK_HOME=/opt/spark/spark-2.4.3-bin-without-hadoop

export HADOOP_CONF_DIR=/opt/hadoop/hadoop-2.7.2/etc/hadoop

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.342.b07-1.el7_9.x86_64

export PATH=$JAVA_HOME/bin:$PATH:$HADOOP_HOME/bin:$HIVE_HOME/bin:$SPARK_HOME/bin

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib"

使配置生效

source /etc/profile

配置免密登录,过程是先生成公私钥,再把公钥拷贝到对应的帐号下

ssh-keygen

ssh-copy-id -i ~/.ssh/id_rsa.pub hadoop@127.0.0.1

配置成功后,测试下是否成功,如果不需要输入密码,证明配置成功。

ssh localhost

添加hosts解析

sudo vi /etc/hosts

修改后

192.168.1.211 localhost

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

127.0.0.1 namenode

配置Hadoop

mkdir -p /opt/hadoop/hadoop-2.7.2/hadoopinfra/hdfs/namenode

mkdir -p /opt/hadoop/hadoop-2.7.2/hadoopinfra/hdfs/datanode

vi /opt/hadoop/hadoop-2.7.2/etc/hadoop/core-site.xml

core-site.xml修改如下

<!-- Put site-specific property overrides in this file. -->

<configuration>

<!-- 指定HDFS中NameNode的地址 -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://127.0.0.1:9000</value>

</property>

<!-- 指定Hadoop运行时产生文件的存储目录 -->

<property>

<name>hadoop.tmp.dir</name>

<value>/opt/hadoop/hadoop-2.7.2/data/tmp</value>

</property>

<property>

<name>hadoop.proxyuser.hadoop.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.hadoop.groups</name>

<value>*</value>

</property>

</configuration>

修改Hadoop的hdfs目录配置

vi /opt/hadoop/hadoop-2.7.2/etc/hadoop/hdfs-site.xml

hdfs-site.xml修改如下

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.name.dir</name>

<value>/opt/hadoop/hadoop-2.7.2/hadoopinfra/hdfs/namenode</value>

</property>

<property>

<name>dfs.data.dir</name>

<value>/opt/hadoop/hadoop-2.7.2/hadoopinfra/hdfs/datanode</value>

</property>

</configuration>

修改Hadoop的yarn配置

vi /opt/hadoop/hadoop-2.7.2/etc/hadoop/yarn-site.xml

yarn-site.xml修改如下

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

<description>Whether virtual memory limits will be enforced for containers</description>

</property>

<property>

<name>yarn.nodemanager.vmem-pmem-ratio</name>

<value>4</value>

<description>Ratio between virtual memory to physical memory when setting memory limits for containers</description>

</property>

</configuration>

修改mapred

cp /opt/hadoop/hadoop-2.7.2/etc/hadoop/mapred-site.xml.template /opt/hadoop/hadoop-2.7.2/etc/hadoop/mapred-site.xml

vi /opt/hadoop/hadoop-2.7.2/etc/hadoop/mapred-site.xml

mapred-site.xml修改如下

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

修改Hadoop环境配置文件

vi /opt/hadoop/hadoop-2.7.2/etc/hadoop/hadoop-env.sh

修改JAVA_HOME

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.342.b07-1.el7_9.x86_64/

初始化hadoop

hdfs namenode -format

/opt/hadoop/hadoop-2.7.2/sbin/start-dfs.sh

/opt/hadoop/hadoop-2.7.2/sbin/start-yarn.sh

临时关闭防火墙

sudo systemctl stop firewalld

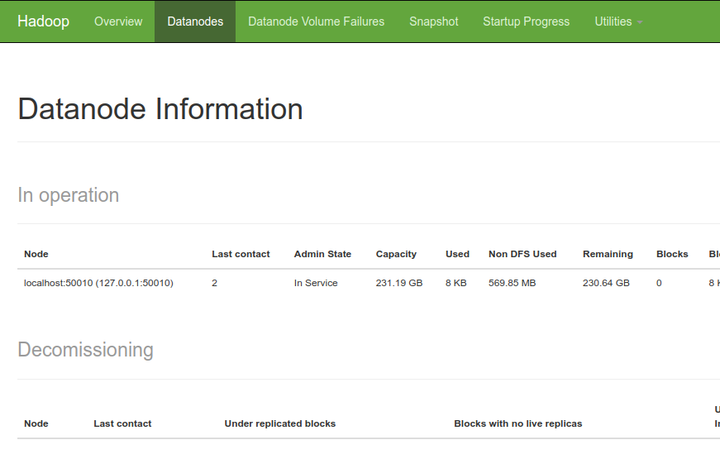

浏览器访问Hadoop

访问hadoop的默认端口号为50070

Hive安装

采用官方安装包安装,要求Hive版本对应如下

Hive(2.3.3,Hive其他版本需自行编译Linkis),安装的机器必须支持执行hive -e "show databases"命令

tar xvf apache-hive-2.3.3-bin.tar.gz

sudo mkdir -p /opt/hive

sudo mv apache-hive-2.3.3-bin /opt/hive/

修改配置文件

cd /opt/hive/apache-hive-2.3.3-bin/conf/

sudo cp hive-env.sh.template hive-env.sh

sudo cp hive-default.xml.template hive-site.xml

sudo cp hive-log4j2.properties.template hive-log4j2.properties

sudo cp hive-exec-log4j2.properties.template hive-exec-log4j2.properties

在Hadoop中创建文件夹并设置权限

hadoop fs -mkdir -p /data/hive/warehouse

hadoop fs -mkdir /data/hive/tmp

hadoop fs -mkdir /data/hive/log

hadoop fs -chmod -R 777 /data/hive/warehouse

hadoop fs -chmod -R 777 /data/hive/tmp

hadoop fs -chmod -R 777 /data/hive/log

hadoop fs -mkdir -p /spark-eventlog

修改hive配置文件

sudo vi hive-site.xml

配置文件如下

<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?><!--

Licensed to the Apache Software Foundation (ASF) under one or more

contributor license agreements. See the NOTICE file distributed with

this work for additional information regarding copyright ownership.

The ASF licenses this file to You under the Apache License, Version 2.0

(the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

--><configuration>

<property>

<name>hive.exec.scratchdir</name>

<value>hdfs://127.0.0.1:9000/data/hive/tmp</value>

</property>

<property>

<name>hive.metastore.warehouse.dir</name>

<value>hdfs://127.0.0.1:9000/data/hive/warehouse</value>

</property>

<property>

<name>hive.querylog.location</name>

<value>hdfs://127.0.0.1:9000/data/hive/log</value>

</property>

<property>

<name>hive.metastore.schema.verification</name>

<value>false</value>

</property>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://127.0.0.1:3306/hive?createDatabaseIfNotExist=true</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value></value>

</property>

<property>

<name>system:java.io.tmpdir</name>

<value>/tmp/hive/java</value>

</property>

<property>

<name>system:user.name</name>

<value>hadoop</value>

</property>

<property>

<name>hive.exec.local.scratchdir</name>

<value>/opt/hive/apache-hive-2.3.3-bin/tmp/${system:user.name}</value>

<description>Local scratch space for Hive jobs</description>

</property>

<property>

<name>hive.downloaded.resources.dir</name>

<value>/opt/hive/apache-hive-2.3.3-bin/tmp/${hive.session.id}_resources</value>

<description>Temporary local directory for added resources in the remote file system.</description>

</property>

<property>

<name>hive.server2.logging.operation.log.location</name>

<value>/opt/hive/apache-hive-2.3.3-bin/tmp/root/operation_logs</value>

<description>Top level directory where operation logs are stored if logging functionality is enabled</description>

</property>

</configuration>

配置hive中jdbc的MySQL驱动

cd /opt/hive/apache-hive-2.3.3-bin/lib/

wget https://downloads.mysql.com/archives/get/p/3/file/mysql-connector-java-5.1.49.tar.gz

tar xvf mysql-connector-java-5.1.49.tar.gz

cp mysql-connector-java-5.1.49/mysql-connector-java-5.1.49.jar .

配置环境变量

sudo vi /opt/hive/apache-hive-2.3.3-bin/conf/hive-env.sh

export HADOOP_HOME=/opt/hadoop/hadoop-2.7.2

export HIVE_CONF_DIR=/opt/hive/apache-hive-2.3.3-bin/conf

export HIVE_AUX_JARS_PATH=/opt/hive/apache-hive-2.3.3-bin/lib

初始化schema

/opt/hive/apache-hive-2.3.3-bin/bin/schematool -dbType mysql -initSchema

初始化完成后修改MySQL链接信息,之后配置MySQL IP 端口以及放元数据的库名称

vi /opt/hive/apache-hive-2.3.3-bin/conf/hive-site.xml

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://127.0.0.1:3306/hive?characterEncoding=utf8&useSSL=false</value>

</property>

nohup hive --service metastore >> metastore.log 2>&1 &

nohup hive --service hiveserver2 >> hiveserver2.log 2>&1 &

验证安装

hive -e "show databases"

Spark安装

采用官方安装包安装,要求Spark版本对应如下

Spark(支持2.0以上所有版本) ,一键安装版本,需要2.4.3版本,安装的机器必须支持执行spark-sql -e "show databases" 命令

安装

tar xvf spark-2.4.3-bin-without-hadoop.tgz

sudo mkdir -p /opt/spark

sudo mv spark-2.4.3-bin-without-hadoop /opt/spark/

配置spark环境变量以及备份配置文件

cd /opt/spark/spark-2.4.3-bin-without-hadoop/conf/

cp spark-env.sh.template spark-env.sh

cp spark-defaults.conf.template spark-defaults.conf

cp metrics.properties.template metrics.properties

cp workers.template workers

配置程序的环境变量

vi spark-env.sh

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.342.b07-1.el7_9.x86_64

export HADOOP_HOME=/opt/hadoop/hadoop-2.7.2

export HADOOP_CONF_DIR=/opt/hadoop/hadoop-2.7.2/etc/hadoop

export SPARK_DIST_CLASSPATH=$(/opt/hadoop/hadoop-2.7.2/bin/hadoop classpath)

export SPARK_MASTER_HOST=127.0.0.1

export SPARK_MASTER_PORT=7077

export SPARK_HISTORY_OPTS="-Dspark.history.ui.port=18080 -

Dspark.history.retainedApplications=50 -

Dspark.history.fs.logDirectory=hdfs://127.0.0.1:9000/spark-eventlog"

修改默认的配置文件

vi spark-defaults.conf

spark.master spark://127.0.0.1:7077

spark.eventLog.enabled true

spark.eventLog.dir hdfs://127.0.0.1:9000/spark-eventlog

spark.serializer org.apache.spark.serializer.KryoSerializer

spark.driver.memory 3g

spark.eventLog.enabled true

spark.eventLog.dir hdfs://127.0.0.1:9000/spark-eventlog

spark.eventLog.compress true

配置工作节点

vi workers

127.0.0.1

配置hive

cp /opt/hive/apache-hive-2.3.3-bin/conf/hive-site.xml /opt/spark/spark-2.4.3-bin-without-hadoop/conf

验证应用程序

/opt/spark/spark-2.4.3-bin-without-hadoop/sbin/start-all.sh

访问集群中的所有应用程序的默认端口号为8080

验证安装

spark-sql -e "show databases"

提示

Error: Failed to load class org.apache.spark.sql.hive.thriftserver.SparkSQLCLIDriver.

Failed to load main class org.apache.spark.sql.hive.thriftserver.SparkSQLCLIDriver.

You need to build Spark with -Phive and -Phive-thriftserver.

查找原因是因为没有集成hadoop的spark没有hive驱动,按网上的讲法,要么自己编译带驱动版本,要么把驱动文件直接放到jars目录。第一种太麻烦,第二种没成功,我用的第三种方法。下载对应版本集成了hadoop的spark安装包,直接覆盖原来的jars目录

tar xvf spark-2.4.3-bin-hadoop2.7.tgz

cp -rf spark-2.4.3-bin-hadoop2.7/jars/ /opt/spark/spark-2.4.3-bin-without-hadoop/

如果提示缺少MySQL驱动,可以将mysql-connector-java-5.1.49/mysql-connector-java-5.1.49.jar放入到spark的jars目录

如果本地没有相关驱动,执行下面脚本

cd /opt/spark/spark-2.4.3-bin-without-hadoop/jars

wget https://downloads.mysql.com/archives/get/p/3/file/mysql-connector-java-5.1.49.tar.gz

tar xvf mysql-connector-java-5.1.49.tar.gz

cp mysql-connector-java-5.1.49/mysql-connector-java-5.1.49.jar .

DataSphere Studio安装

准备安装包

unzip -d dss dss_linkis_one-click_install_20220704.zip

sudo yum -y install epel-release

sudo yum install -y python-pip

python -m pip install matplotlib

修改配置

用户需要对 xx/dss_linkis/conf 目录下的 config.sh 和 db.sh 进行修改

### deploy user

deployUser=hadoop

### Linkis_VERSION

LINKIS_VERSION=1.1.1

### DSS Web

DSS_NGINX_IP=127.0.0.1

DSS_WEB_PORT=8085

### DSS VERSION

DSS_VERSION=1.1.0

############## ############## linkis的其他默认配置信息 start ############## ##############

### Specifies the user workspace, which is used to store the user's script files and log files.

### Generally local directory

##file:// required

WORKSPACE_USER_ROOT_PATH=file:///tmp/linkis/

### User's root hdfs path

##hdfs:// required

HDFS_USER_ROOT_PATH=hdfs:///tmp/linkis

### Path to store job ResultSet:file or hdfs path

##hdfs:// required

RESULT_SET_ROOT_PATH=hdfs:///tmp/linkis

### Path to store started engines and engine logs, must be local

ENGINECONN_ROOT_PATH=/appcom/tmp

#ENTRANCE_CONFIG_LOG_PATH=hdfs:///tmp/linkis/ ##hdfs:// required

###HADOOP CONF DIR #/appcom/config/hadoop-config

HADOOP_CONF_DIR=/opt/hadoop/hadoop-2.7.2/etc/hadoop

###HIVE CONF DIR #/appcom/config/hive-config

HIVE_CONF_DIR=/opt/hive/apache-hive-2.3.3-bin/conf

###SPARK CONF DIR #/appcom/config/spark-config

SPARK_CONF_DIR=/opt/spark/spark-2.4.3-bin-without-hadoop/conf

# for install

LINKIS_PUBLIC_MODULE=lib/linkis-commons/public-module

##YARN REST URL spark engine required

YARN_RESTFUL_URL=http://127.0.0.1:8088

## Engine version conf

#SPARK_VERSION

SPARK_VERSION=2.4.3

##HIVE_VERSION

HIVE_VERSION=2.3.3

PYTHON_VERSION=python2

## LDAP is for enterprise authorization, if you just want to have a try, ignore it.

#LDAP_URL=ldap://localhost:1389/

#LDAP_BASEDN=dc=webank,dc=com

#LDAP_USER_NAME_FORMAT=cn=%s@xxx.com,OU=xxx,DC=xxx,DC=com

################### The install Configuration of all Linkis's Micro-Services #####################

#

# NOTICE:

# 1. If you just wanna try, the following micro-service configuration can be set without any settings.

# These services will be installed by default on this machine.

# 2. In order to get the most complete enterprise-level features, we strongly recommend that you install

# the following microservice parameters

#

### EUREKA install information

### You can access it in your browser at the address below:http://${EUREKA_INSTALL_IP}:${EUREKA_PORT}

### Microservices Service Registration Discovery Center

LINKIS_EUREKA_INSTALL_IP=127.0.0.1

LINKIS_EUREKA_PORT=9600

#LINKIS_EUREKA_PREFER_IP=true

### Gateway install information

#LINKIS_GATEWAY_INSTALL_IP=127.0.0.1

LINKIS_GATEWAY_PORT=9001

### ApplicationManager

#LINKIS_MANAGER_INSTALL_IP=127.0.0.1

LINKIS_MANAGER_PORT=9101

### EngineManager

#LINKIS_ENGINECONNMANAGER_INSTALL_IP=127.0.0.1

LINKIS_ENGINECONNMANAGER_PORT=9102

### EnginePluginServer

#LINKIS_ENGINECONN_PLUGIN_SERVER_INSTALL_IP=127.0.0.1

LINKIS_ENGINECONN_PLUGIN_SERVER_PORT=9103

### LinkisEntrance

#LINKIS_ENTRANCE_INSTALL_IP=127.0.0.1

LINKIS_ENTRANCE_PORT=9104

### publicservice

#LINKIS_PUBLICSERVICE_INSTALL_IP=127.0.0.1

LINKIS_PUBLICSERVICE_PORT=9105

### cs

#LINKIS_CS_INSTALL_IP=127.0.0.1

LINKIS_CS_PORT=9108

########## Linkis微服务配置完毕#####

################### The install Configuration of all DataSphereStudio's Micro-Services #####################

#

# NOTICE:

# 1. If you just wanna try, the following micro-service configuration can be set without any settings.

# These services will be installed by default on this machine.

# 2. In order to get the most complete enterprise-level features, we strongly recommend that you install

# the following microservice parameters

#

### DSS_SERVER

### This service is used to provide dss-server capability.

### project-server

#DSS_FRAMEWORK_PROJECT_SERVER_INSTALL_IP=127.0.0.1

#DSS_FRAMEWORK_PROJECT_SERVER_PORT=9002

### orchestrator-server

#DSS_FRAMEWORK_ORCHESTRATOR_SERVER_INSTALL_IP=127.0.0.1

#DSS_FRAMEWORK_ORCHESTRATOR_SERVER_PORT=9003

### apiservice-server

#DSS_APISERVICE_SERVER_INSTALL_IP=127.0.0.1

#DSS_APISERVICE_SERVER_PORT=9004

### dss-workflow-server

#DSS_WORKFLOW_SERVER_INSTALL_IP=127.0.0.1

#DSS_WORKFLOW_SERVER_PORT=9005

### dss-flow-execution-server

#DSS_FLOW_EXECUTION_SERVER_INSTALL_IP=127.0.0.1

#DSS_FLOW_EXECUTION_SERVER_PORT=9006

###dss-scriptis-server

#DSS_SCRIPTIS_SERVER_INSTALL_IP=127.0.0.1

#DSS_SCRIPTIS_SERVER_PORT=9008

###dss-data-api-server

#DSS_DATA_API_SERVER_INSTALL_IP=127.0.0.1

#DSS_DATA_API_SERVER_PORT=9208

###dss-data-governance-server

#DSS_DATA_GOVERNANCE_SERVER_INSTALL_IP=127.0.0.1

#DSS_DATA_GOVERNANCE_SERVER_PORT=9209

###dss-guide-server

#DSS_GUIDE_SERVER_INSTALL_IP=127.0.0.1

#DSS_GUIDE_SERVER_PORT=9210

########## DSS微服务配置完毕#####

############## ############## other default configuration 其他默认配置信息 ############## ##############

## java application default jvm memory

export SERVER_HEAP_SIZE="512M"

##sendemail配置,只影响DSS工作流中发邮件功能

EMAIL_HOST=smtp.163.com

EMAIL_PORT=25

EMAIL_USERNAME=xxx@163.com

EMAIL_PASSWORD=xxxxx

EMAIL_PROTOCOL=smtp

### Save the file path exported by the orchestrator service

ORCHESTRATOR_FILE_PATH=/appcom/tmp/dss

### Save DSS flow execution service log path

EXECUTION_LOG_PATH=/appcom/tmp/dss

脚本安装

cd xx/dss_linkis/bin

sh install.sh

等待安装脚本执行完毕,再进到linkis目录里修改对应的配置文件

修改linkis-ps-publicservice.properties配置,否则hive数据库刷新不出来表

linkis.metadata.hive.permission.with-login-user-enabled=false

拷贝缺少的jar

cp /opt/hive/apache-hive-2.3.3-bin/lib/datanucleus-* ~/dss/linkis/lib/linkis-engineconn-plugins/hive/dist/v2.3.3/lib

cp /opt/hive/apache-hive-2.3.3-bin/lib/*jdo* ~/dss/linkis/lib/linkis-engineconn-plugins/hive/dist/v2.3.3/lib

安装完成后启动

sh start-all.sh

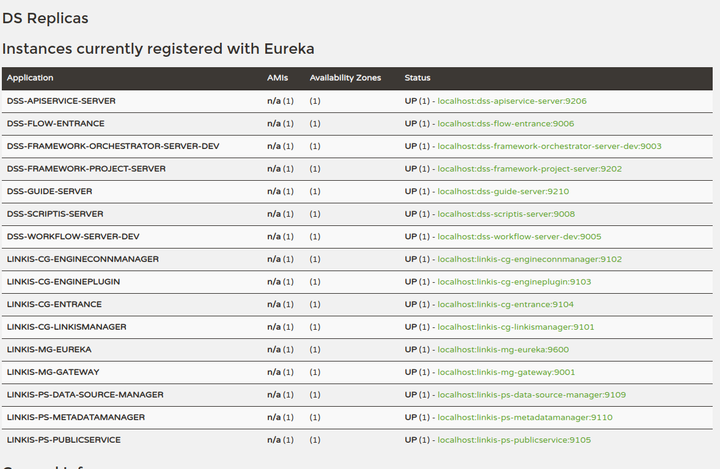

启动完成后eureka注册页面

最后一个坑,前端部署完会报权限错误,把前端迁移到opt目录,记得修改nginx配置

sudo cp -rf web/ /opt/

最后系统启动完毕